Design for editing storage on a budget

Any videographer is familiar with the struggles that follow a video shoot: how and where to store the recordings.

Digital video shoots can range anywhere from 1 to 100 GB for smartphone, social-media-style captures, up to multi-day productions that can turn over as much as 5, 7 or 10 TB on completion.

Video production houses have the additional challenge of maintaining tens or hundreds of these shoots on their systems, not only during editing but sometimes for years afterwards, depending on contractual agreements.

For one of my clients, video projects are cooked and stirred in the pot for about a month, but the assets are recalled many times afterwards, for repackaging in a different social format or for reuse as additional footage in another related project.

Projects themselves are quite numerous as well, and serve tens of different upstream clients.

A NAS server is the natural platform of choice for this set of needs.

It presents a single aggregated volume that is, of course, more durable than a single physical disk, and accessible from different hosts with user accounts, permissions and all sorts of fun benefits.

A QNAP system (TS-873) was used as the centrepiece of this client’s storage infrastructure for many years.

Its 8 bays were fitted with 10TB 7200RPM spinning rust and configured at the time as RAID 5 (yes, RAID five), for a usable capacity of 60~70 TB. It had an AMD 4-core 2.1GHz processor and 8GB of DDR4, and ran QTS 5. Later in its life it was also fitted with a dual 10GBASE-T adapter.

On paper, the system was rated for read throughput of 1.92 GB/s and write throughput of 480 MB/s, but in reality the throughput to each Mac client over Samba was always lower and trending toward insufficient. With about 3 concurrent video editors, CPU utilisation climbed into the 90s (percent).

It was time for an upgrade, so let’s do it.

New deployment

I designed the system in August 2024 and deployed it successfully in June 2025.

Let’s break down the client’s needs from the system.

System needs

- 2~3 concurrent video editors (streaming all the time)

- 4~6 occasional users (occasional file lookups and writes)

- Video streams in a mixed bag of ProRes, BRAW and XAVC formats (all file-per-clip), usually at 3840x2160 resolution, between 25 ~ 100 MB/s per stream

- Existing dataset of about 50 TB

- New data generated at about 25 TB per year

- A commitment to their upstream clients to retain shoot data for 24 months

- Storage to be file-based

- Filesystem access protocol to be SMB (Samba), as team members are using Mac and Windows devices, part-owned and part-BYO

- Remote access to storage for authenticated users working from home

- Variety in hot and cold projects, so tending toward a single volume with no manual tiering

Observations

Based on the above, throughput demand should be around 500~700 MB/s coming straight off disk, which a wide enough array of spinning disks can deliver, so we don’t necessarily need flash.
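That claim is easy to sanity-check with some back-of-envelope arithmetic. The sketch below is illustrative rather than the calculation behind the figure above: the 200 MB/s per-disk streaming rate and the 50% efficiency derating are assumed round numbers, not measurements, and `streams_per_editor` is a hypothetical knob for timelines that play more than one clip at once.

```python
# Back-of-envelope sizing: editing demand vs. what spinning disks can stream.
# ASSUMPTIONS (illustrative only): the per-disk rate and efficiency factor
# defaults are round numbers, not measured or datasheet values.


def peak_demand_mb_s(editors: int, mb_s_per_stream: float,
                     streams_per_editor: int = 1) -> float:
    """Worst case: every editor streams at full rate at the same time."""
    return editors * streams_per_editor * mb_s_per_stream


def pool_stream_mb_s(data_disks: int, mb_s_per_disk: float = 200.0,
                     efficiency: float = 0.5) -> float:
    """Rough aggregate streaming rate of the data (non-parity) disks,
    derated for seeks, parity work and mixed access from several clients."""
    return data_disks * mb_s_per_disk * efficiency


if __name__ == "__main__":
    # 3 editors at 100 MB/s, hypothetically 2 clips playing per timeline
    demand = peak_demand_mb_s(editors=3, mb_s_per_stream=100, streams_per_editor=2)
    # 8 data disks, e.g. 12 disks split into two RAIDZ2 vdevs
    supply = pool_stream_mb_s(data_disks=8)
    print(f"demand ~{demand:.0f} MB/s, pool ~{supply:.0f} MB/s")
```

Under those assumptions, 3 editors each playing 2 streams at 100 MB/s need about 600 MB/s, while 8 data disks come out around 800 MB/s, so spinning disks plausibly cover the load. Swap in the datasheet rate of the drives you are actually buying.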

But the client needs to keep any and all content close at hand, and with ~25 TB generated each year from video shoots, the capacity ceiling needs to be high.

So as priorities, let’s set capacity 1 and throughput 2.

Of course cost though… let’s adjust that to cost 1, capacity 2, throughput 3.

Let’s say we move to at least 10 disks wide, with the more durable choice of 2 parity disks (RAID 6/60 or RAIDZ2).

We could also plan for some extra throughput on re-read file sections through a RAM cache (e.g. the ZFS ARC).

Those disks should be as high-capacity as possible, 16~24 TB each, according to cost and availability at time of purchase.

Network-wise, let’s have a 10G backbone for at least 8 ‘fast’ clients and connect every other user at 1G.

Shopping for server

Vendor-wise, we have plenty of options, though some are pricier than others: QNAP, Synology, OWC and EditShare.

For the OS/software layer and its corresponding filesystem, we have QNAP QTS (ext4) and QuTS hero (ZFS), Synology DSM (Btrfs), OWC Jellyfish OS (ZFS), EditShare (proprietary EFS) and TrueNAS SCALE (ZFS).

Though I want to discuss technology rather than price in this blog post, here are some ballpark numbers:

- QNAP 12/16 bay desktop unit with network accessories - A$6,000

- 12x 18TB 7200RPM disks - ~A$650 per unit = ~A$7,800

- EditShare EFS200 with license but excluding support - A$30,000

- Dell PowerVault SAN - 5~6 figures in A$

So there is a QNAP tier, an EditShare tier and a Dell tier.

If all we need is 12 disks and ZFS and SMB access…

And we’re willing to compromise on turnkey and support…

Why not a used, performant 2U server running TrueNAS SCALE?

And balance new drives with factory-recertified ones.

Shopping for networking

We need to connect 3 video editors and 6 occasional users.

Ideally we keep the topology simple and use a single core switch.

However, the clients on 10G need to connect over copper (10GBASE-T, RJ45).

Across laptops of any kind and Mac Studios with a custom BTO 10G internal NIC, the most widely supported interface type is indeed 10GBASE-T, and adapters for it can be bought somewhat affordably at ~A$400.

And 2.5GBASE-T USB NICs even as low as A$40!

The options for USB or Thunderbolt-based optical NICs are… very limited, and where they exist they are pricey. (Sonnet SFP+ 10G Thunderbolt 3 is A$739).

So a switch of some description with plentiful 10G RJ45 ports is needed here.

As of January 2024, MikroTik’s CRS312-4C+8XG-RM gets us 12x 10G ports (8x RJ45 and 4x combo RJ45/SFP+).

Let’s put in a 24x 1G switch (Cisco SG200-26) to facilitate any popup clients and other servers that only require 1G.

If I had my time again, I would not use either of these components: the SG200-26 sadly lacks a 10G SFP+ uplink, and the CRS312-4C+8XG-RM fills up quickly.

I’d favour keeping all of the clients and servers on a single core switch, given that there are only 12 or so of them, which fits within 24 ports.

For future expansion, a second unit of the same core model could then be bought and uplinked.

Final specification

Metal

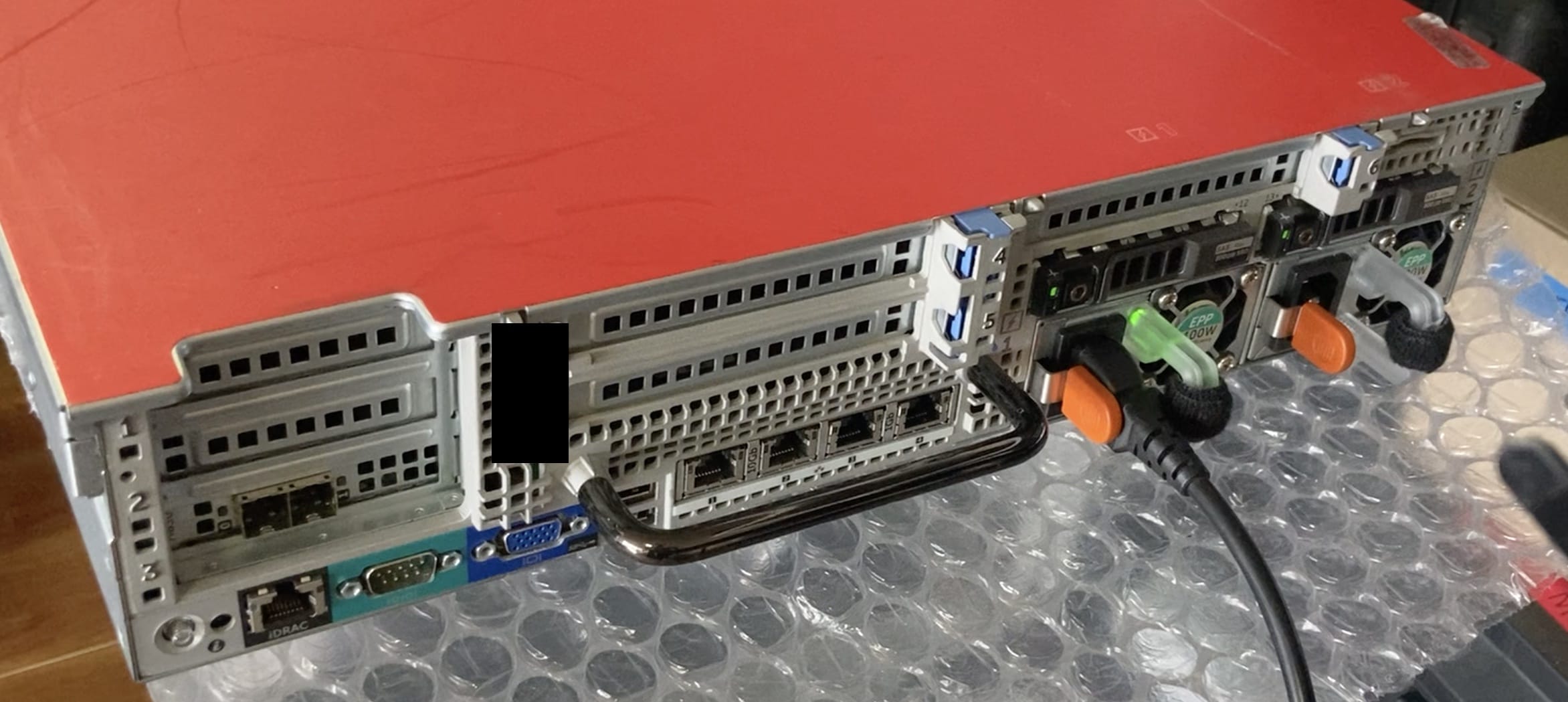

System: Dell PowerEdge R730XD with iDRAC 8

CPU: 2x Intel Xeon E5-2667 v4 @ 3.20GHz

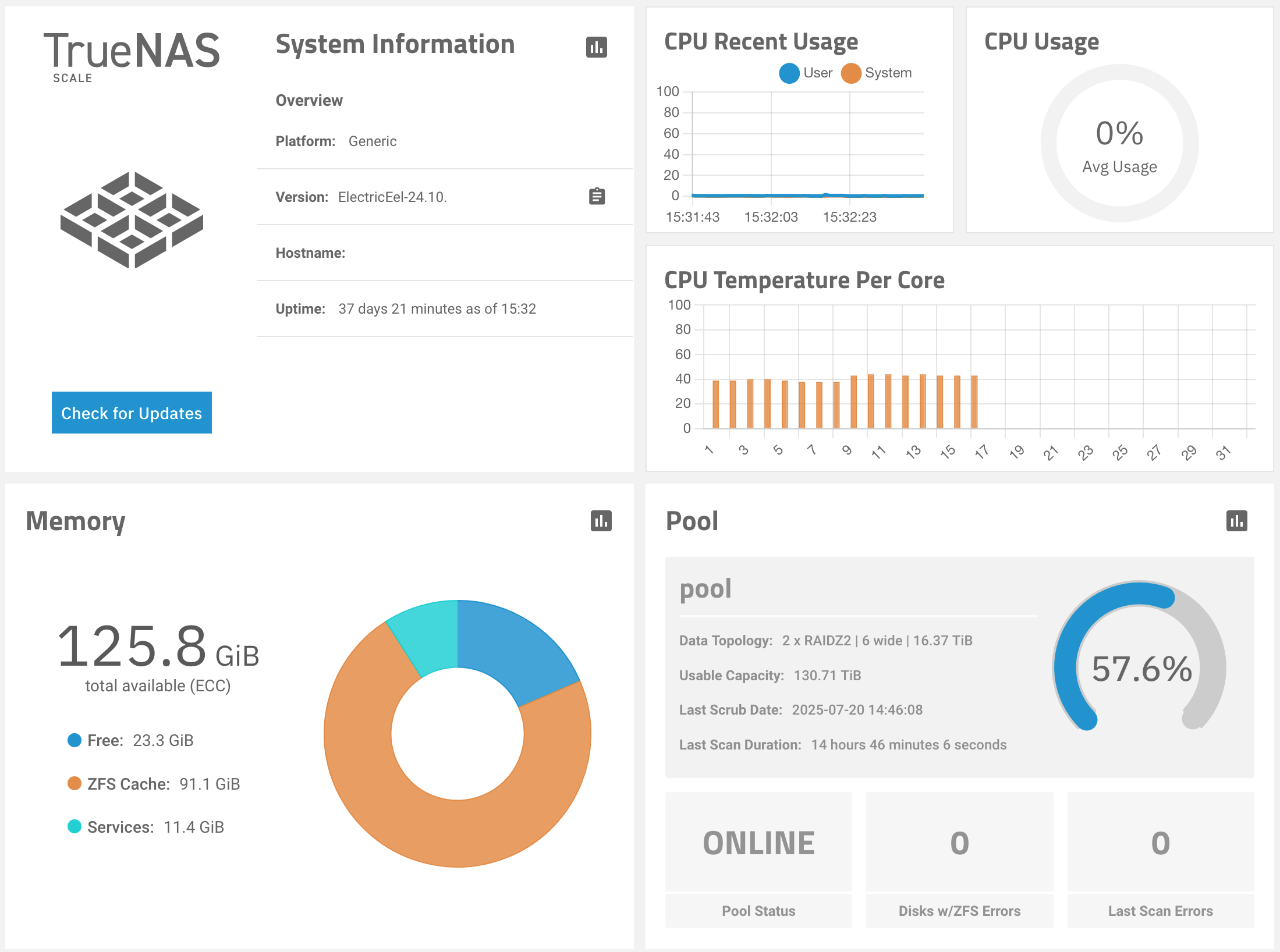

RAM: 128 GB RDIMM DDR4 ECC

NIC: Dell NIC with 2x 1GBASE-T and 2x 10GBASE-T

Disks: 2x Hitachi HUSMM1680ASS204 800GB SAS (OS)

8x WD WUH721818AL5204 18TB SAS

6x Seagate EXOS ST18000NM004J 18TB SAS

= 216 TB raw

Storage: 2x RAIDZ2 vdevs of 6 disks each

= 130 TiB usable
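The usable figure follows from simple arithmetic: each 6-disk RAIDZ2 vdev gives up 2 disks to parity, leaving 8 data disks of 18 TB, and the TB-to-TiB conversion brings that down to roughly 130 TiB. The sketch below also projects a naive capacity runway from the ~50 TB existing dataset and ~25 TB/year growth quoted earlier. It is a simplification: it ignores ZFS metadata, slop space and RAIDZ padding, assumes nothing is ever deleted, and the 80% fill ceiling is a common rule of thumb rather than a hard limit.

```python
# Usable capacity of a pool of identical RAIDZ vdevs, plus a naive runway.
# Ignores ZFS metadata, slop space and RAIDZ padding, so the space TrueNAS
# actually reports will be somewhat lower.

TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # ZFS/TrueNAS report binary tebibytes


def raidz_usable_tb(vdevs: int, disks_per_vdev: int, parity: int,
                    disk_tb: float) -> float:
    """Data capacity in decimal TB: each vdev loses `parity` disks."""
    return vdevs * (disks_per_vdev - parity) * disk_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


def years_until_full(usable_tb: float, existing_tb: float,
                     growth_tb_per_year: float, max_fill: float = 1.0) -> float:
    """Years before the pool reaches `max_fill` of capacity, assuming
    linear growth and no deletions (worst case)."""
    return (usable_tb * max_fill - existing_tb) / growth_tb_per_year


if __name__ == "__main__":
    usable = raidz_usable_tb(vdevs=2, disks_per_vdev=6, parity=2, disk_tb=18)
    print(f"{usable:.0f} TB = {tb_to_tib(usable):.2f} TiB usable")
    print(f"runway at 100% fill: {years_until_full(usable, 50, 25):.1f} years")
    print(f"runway at 80% fill:  {years_until_full(usable, 50, 25, 0.8):.1f} years")
```

With this build that works out to 144 TB (about 130.97 TiB), and a worst-case runway of roughly 3.8 years at 100% fill or 2.6 years at an 80% ceiling, before the 24-month retention policy frees up any space.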

OS: TrueNAS SCALE 24.10

Network

Access Switch: Cisco SG200-26 (26x 1GBASE-T)

Core Switch: MikroTik CRS312-4C+8XG-RM (8x 10GBASE-T & 4x Combo/SFP+)

Build

Let’s assemble.

Here are some pics from an earlier stage, when the network backbone (MikroTik + Cisco) was implemented first.

Then the PowerEdge server build:

And the resulting TrueNAS system after configuration and data migration:

Deployment

The initial data migration consists of staged rsync transfers from NAS1 (QNAP TS-873) to NAS2 (the new system), using rsyncd and the rsync:// protocol.

Config, flags, file lists, inclusions/exclusions and so on are stored in a shell script, migrate.sh, which runs inside a tmux session.
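The script itself isn’t reproduced here, but the core of a staged rsync:// pull can be sketched as follows. This is a hypothetical Python equivalent, not the actual migrate.sh: the host `nas1`, module `video`, the stage paths, the destination under `/mnt/tank` and the exclude patterns are all made-up placeholders. The flags used (`--archive`, `--partial`, `--info=progress2`, `--dry-run`, `--exclude`) are standard rsync options.

```python
import subprocess
from pathlib import Path
from typing import Iterable, List

# HYPOTHETICAL placeholders: substitute the real rsyncd host, module and pool paths.
SRC_HOST = "nas1"
MODULE = "video"
DEST_ROOT = "/mnt/tank/video"
STAGES = ["Projects/2023", "Projects/2024", "Archive"]
EXCLUDES = [".DS_Store", "._*"]  # example macOS metadata files to skip


def build_rsync_cmd(src_host: str, module: str, subpath: str, dest: str,
                    excludes: Iterable[str] = (), dry_run: bool = False) -> List[str]:
    """Build an rsync command that pulls one stage from an rsyncd module."""
    cmd = ["rsync", "--archive", "--partial", "--info=progress2"]
    if dry_run:
        cmd.append("--dry-run")
    cmd += [f"--exclude={pattern}" for pattern in excludes]
    remote_path = "/".join(p for p in (module, subpath.strip("/")) if p)
    # Trailing slashes: copy the *contents* of the source directory into dest.
    cmd += [f"rsync://{src_host}/{remote_path}/", dest.rstrip("/") + "/"]
    return cmd


def run_stages(dry_run: bool = True) -> None:
    """Run each stage in order, stopping at the first rsync failure."""
    for stage in STAGES:
        dest = f"{DEST_ROOT}/{stage}"
        # rsync creates only the final path component, so make parents first.
        Path(dest).mkdir(parents=True, exist_ok=True)
        cmd = build_rsync_cmd(SRC_HOST, MODULE, stage, dest, EXCLUDES, dry_run)
        print("+", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

Calling `run_stages(dry_run=True)` first shows what would move; re-running the same stages later behaves like a top-up, since rsync skips files whose size and modification time are unchanged. There is deliberately no `--delete`, so files removed on NAS1 stay on NAS2. If the rsyncd module requires authentication, rsync’s `--password-file` option covers it.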

Since the team is still using NAS1 in the meantime, I configure an automatic nightly ‘top up’ sync to NAS2 and monitor it closely.

I swap most team members’ desktops to the new IP address for NAS2 and update our internal docs for future reference.

The primary client connection method is macOS Finder’s built-in “Connect to Server” dialog: enter the storage hostname as an smb:// URL and save it to favourites.

I’m pleased to move away from Bonjour/mDNS-based discovery and proprietary connection apps like QNAP Qfinder.

That’s largely the extent of the deployment! :) No major headaches.

Some hurdles crossed:

- Some macOS clients have their network service order jumbled depending on other devices being used. LG monitors can even present themselves as a NIC in the adapters list in System Settings > Network. In this environment, Wi-Fi is first in the service order, then the USB 2.5G NIC.

- When a macOS client has Wi-Fi (or any adapter other than the one carrying the company internal network) first in the service order, that adapter becomes the primary adapter, and DNS queries are always resolved first against the DNS servers on that adapter. Even if the 2.5G NIC receives a DHCP offer that includes a domain like company.com, macOS won’t resolve local resources at *.company.com! Routing over that adapter still works, so connecting via an explicit IPv4 address works and is the current workaround.

A better implementation would be to make sure that a company interface is first in the macOS networking stack, whether that’s Wi-Fi or wired. In this case, that would be a company Wi-Fi SSID. If a facility Wi-Fi network is used (not part of our company but available at the site for general internet use), that Wi-Fi ‘slot’ takes the first position and determines DNS.
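Checking which service sits first can be scripted from the output of `networksetup -listnetworkserviceorder`. The sketch below assumes the numbered “(1) Wi-Fi” line format that command prints on recent macOS, followed by a “(Hardware Port: …)” line per service; the company service name used in the usage note is hypothetical and would need to match whatever the adapter is called on each Mac.

```python
import re
import subprocess
from typing import List, Set

# "(1) Wi-Fi" style lines give services in priority order. The
# "(Hardware Port: ..., Device: ...)" lines that follow don't start
# with a number, so the pattern skips them.
SERVICE_RE = re.compile(r"^\((\d+)\)\s+(.+)$")


def parse_service_order(output: str) -> List[str]:
    """Return network service names in the order macOS prefers them."""
    services = []
    for line in output.splitlines():
        match = SERVICE_RE.match(line.strip())
        if match:
            services.append(match.group(2))
    return services


def company_service_first(output: str, company_services: Set[str]) -> bool:
    """True if the top-priority service (the one that wins DNS) is a company one."""
    order = parse_service_order(output)
    return bool(order) and order[0] in company_services


def current_service_order() -> List[str]:
    """Query the local Mac (macOS only)."""
    result = subprocess.run(["networksetup", "-listnetworkserviceorder"],
                            capture_output=True, text=True, check=True)
    return parse_service_order(result.stdout)
```

Running `company_service_first(...)` against a hypothetical company service such as "USB 10/100/1000 LAN" flags machines where Wi-Fi has jumped ahead. The fix itself is `networksetup -ordernetworkservices` followed by every service name in the desired order (admin rights required), and `scutil --dns` shows which resolvers macOS is actually using.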

Performance monitoring

I was pretty happy with the performance during testing: FIO benchmarks (which I haven’t documented here) showed some 500~700 MB/s of raw write speed to the pool and plenty of read speed.

I have node, smartctl, ZFS and IPMI exporters feeding a Prometheus instance.

TrueNAS SCALE can also export metrics to Graphite, but that data looks much the same as what I already get from those exporters, so I have little desire to manage Graphite in the stack as well.

I am curious about the ZFS ARC hit rate, so I will dig into Grafana and see what that looks like.
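For a quick look outside Grafana, OpenZFS on Linux (which TrueNAS SCALE is built on) publishes cumulative ARC counters in `/proc/spl/kstat/zfs/arcstats`. This sketch parses that table and computes the hit ratio over an interval from two samples; it assumes the usual kstat layout of a header line, a “name type data” column line, then one row per statistic.

```python
import time
from pathlib import Path
from typing import Dict

# OpenZFS on Linux publishes ARC counters here.
ARCSTATS = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text: str) -> Dict[str, int]:
    """Skip the kstat header and the 'name type data' line, then read
    one 'name type value' row per statistic."""
    stats: Dict[str, int] = {}
    for line in text.splitlines()[2:]:
        parts = line.split()
        if len(parts) != 3:
            continue
        name, _kind, value = parts
        try:
            stats[name] = int(value)
        except ValueError:
            continue
    return stats


def arc_hit_ratio(before: Dict[str, int], after: Dict[str, int]) -> float:
    """Hit ratio between two samples (the counters are cumulative since boot)."""
    hits = after["hits"] - before["hits"]
    misses = after["misses"] - before["misses"]
    total = hits + misses
    return hits / total if total else 0.0


def sample_hit_ratio(interval_s: float = 60.0) -> float:
    """Measure the ARC hit ratio over `interval_s` seconds on a live system."""
    before = parse_arcstats(ARCSTATS.read_text())
    time.sleep(interval_s)
    after = parse_arcstats(ARCSTATS.read_text())
    return arc_hit_ratio(before, after)
```

The same ratio in Prometheus would be `rate(hits) / (rate(hits) + rate(misses))` over the equivalent exporter counters; node_exporter’s ZFS collector typically names them `node_zfs_arc_hits` and `node_zfs_arc_misses`, but that is worth confirming against your exporter’s /metrics output.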

The remarks from the users were pretty positive and didn’t indicate any performance issues, which is great.

Support justification

I know what an informed reader might be thinking.

This is a bespoke solution which shaves away dollars from certain areas (software cost, support cost) and introduces new costs and risks at different points.

For example, the TrueNAS installation is the Community Edition, which precludes any formal software support from the developer, TrueNAS (formerly known as iXsystems), apart from their user forum.

However, acting as a micro MSP, I provide these services myself and have a long working relationship with the client.

If I am skilled in server hardware, bare metal OS management, software and client connection protocols, and networking, as a service provider, I can cover their needs and the problems that might arise and negotiate with any vendors or upstream developers when required.

If I watch update release cycles, mailing lists and forums regularly, I can keep abreast of the state of the technology being deployed.

This means that in this case, the client is well looked after by at least one entity, one who can touch a wider scope of responsibilities than perhaps individual vendor support contracts could.

Some things are not the best on paper, but they can be good enough for now.

(Even if “now” always starts out as temporary, then becomes de facto, then becomes what-we’ve-always-done, then becomes permanent.)

Thanks for reading!